The Client

The Client program acts as the client of a voice-over-IP system. It is written, and therefore structured, around GLib-based libraries ("g-libraries"), which provide its streaming, networking and graphical interface facilities. The program is divided into four main parts, each performing a different task within the overall workflow.

General Introduction to The Client

The first part concerns the initialization of the graphical user interface (GUI), which is logically the first section to set up. The interface is built with the GTK libraries which, like every g-programming library, provide a particular environment made up of structures, initialization functions and callbacks. To keep the program tidy across its several conceptual sections, the many global variables are collected in related typedef structures that the functions refer to. The fundamental building blocks of GTK are structures called GtkWidget, and every element in the programming chain refers to them. Next come the initialization functions, which set up the conditions under which the program works: in this phase the basic layout of the GUI is loaded, that is the main widgets (windows, boxes, buttons) and the signals assigned to them. Finally come the callbacks attached to event-driven signals, which define their behavior.

Clearly, callback functions are tightly interconnected with the other g-libraries and are called to execute different tasks, such as networking or manipulating the audio stream. Before the user is allowed to interact with the program and its GUI, every step of the chain must therefore be initialized. To that end, the GTK initialization is followed by the set-up of the remaining libraries. Only after that can the main loop start to run, giving the user a way to interact with the program.

A client-server architecture implies a strict interaction between the two counterparts; as usual, this is achieved by defining specific communication protocols. In this case, protocol messages between server and client are transferred over UDP. This is handled by the GNet libraries, another example of g-programming and therefore structured in the same way. Once a socket and its network properties (IP address and port) have been associated with the client and the server through the proper functions, a UDP communication can be set up and information and messages can be exchanged.

Being an audio-conference program, the Client must be able to receive, manipulate and send audio streams through the network. This task is fulfilled by the GStreamer libraries, which let the programmer set up a pipeline made of conceptual blocks that play specific roles. The pipeline is the working heart of the entire system.

The behavior of a Client program in a VoIP client-server scheme can be roughly described as follows. First, the Client sends the Server a request to join an audio conference; meanwhile, it starts processing the audio coming from the local microphone and sending it to the Server. The Server then replies by giving the Client an identification number called SSRC and by sharing the whole audio stream collected from all the connected users, including the Client itself. Before playing the audio stream received from the Server, the Client must therefore remove its own voice to prevent the annoying phenomenon known as "echo". To do this, the pipeline is provided with a filter that, after gathering audio samples from the microphone, removes the local voice from the main stream by means of correlation techniques. Each of these stages is examined in its dedicated section.

The Client Explained

Before going deep into the pipeline, it is important to state that a multimedia audio-conference system must meet severe timing constraints: large delays in the audio stream would cripple the usefulness of the whole system. As a consequence, every step of the program must be designed with efficiency and speed in mind. The first step in this direction is the use of RTP (Real-time Transport Protocol) carried in UDP packets, which requires appropriate blocks in the pipeline: a UDP source followed by an RTP depayloader (RTPDEPAY) and a DECODER. On the transmitting side the same layout is mirrored: an ENCODER, an RTP payloader (RTPPAY) and a UDP sink carry out the task.
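
To make the RTP-over-UDP layering concrete, here is a minimal sketch of the 12-byte fixed RTP header defined by RFC 3550, written in Python purely for illustration. In the Client this work is done by the pipeline's payloader and depayloader blocks; the function names `build_rtp_packet` and `parse_rtp_header` are hypothetical and not part of the program.

```python
import struct

RTP_VERSION = 2
PAYLOAD_TYPE_PCMA = 8  # static payload type assigned to A-law audio (RFC 3551)


def build_rtp_packet(seq, timestamp, ssrc, payload,
                     payload_type=PAYLOAD_TYPE_PCMA, marker=False):
    """Prepend the 12-byte RTP fixed header to an already encoded payload."""
    byte0 = RTP_VERSION << 6  # version 2, no padding, no extension, no CSRC
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    header = struct.pack("!BBHII", byte0, byte1, seq & 0xFFFF,
                         timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)
    return header + payload


def parse_rtp_header(packet):
    """Split a packet into its header fields and payload."""
    byte0, byte1, seq, timestamp, ssrc = struct.unpack("!BBHII", packet[:12])
    return {
        "version": byte0 >> 6,
        "marker": bool(byte1 >> 7),
        "payload_type": byte1 & 0x7F,
        "seq": seq,
        "timestamp": timestamp,
        "ssrc": ssrc,
        "payload": packet[12:],
    }
```

The resulting bytes are what would travel inside a single UDP datagram; the SSRC field is the identifier that lets the receiver tell the streams of different participants apart.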

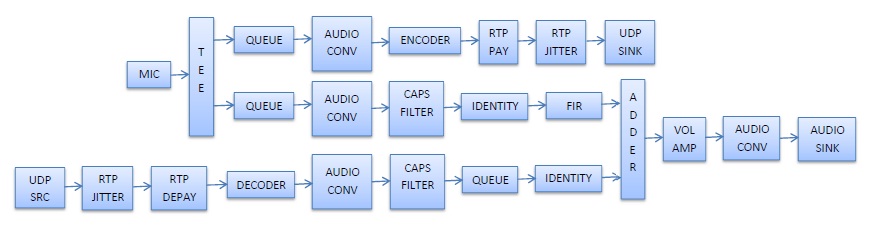

Understanding how the Client works requires a thorough understanding of the pipeline. A detailed description follows, starting from the block diagram shown above.

As can be seen, there are three main branches: the "Encoding Path" (the upper one), the "Elaboration Path" (in the middle) and the "Decoding Path" (the lowest).

The Encoding Path

In general, each branch starts with a "queue" element, which forces the pipeline to create a new thread and spreads the workload in order to reduce synchronization problems between components. So, after being split into two streams, the audio samples coming from the microphone are pushed toward the Encoding Path, which is managed by a separate thread thanks to the queue element.

Since elements in a pipeline cannot always handle the same stream capabilities, audio converters are needed: they cover the conversion between a wide range of audio formats and allow elements that do not share the same stream caps to be linked.

To reduce the burden of transmitting raw samples, they are first compressed with non-linear PCM A-law encoding. The resulting stream is then used to build RTP packets, which are subsequently sent to the network over UDP.
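
The non-linear compression mentioned above can be illustrated with the continuous A-law companding curve. This is only a sketch in Python: the encoder block in the pipeline uses the segmented 8-bit approximation standardized in G.711 rather than this floating-point formula, and the helper names (`alaw_compress`, `alaw_expand`, `quantize`) are assumptions made for the example.

```python
import math

A = 87.6  # compression parameter of the A-law curve


def alaw_compress(x):
    """Map a sample in [-1, 1] to its companded value in [-1, 1]."""
    ax = abs(x)
    if ax < 1.0 / A:
        y = A * ax / (1.0 + math.log(A))          # linear segment near zero
    else:
        y = (1.0 + math.log(A * ax)) / (1.0 + math.log(A))  # logarithmic segment
    return math.copysign(y, x)


def alaw_expand(y):
    """Inverse of alaw_compress."""
    ay = abs(y)
    if ay < 1.0 / (1.0 + math.log(A)):
        x = ay * (1.0 + math.log(A)) / A
    else:
        x = math.exp(ay * (1.0 + math.log(A)) - 1.0) / A
    return math.copysign(x, y)


def quantize(value, bits=8):
    """Uniform quantizer on [-1, 1] with 2**(bits-1) - 1 positive levels."""
    levels = 2 ** (bits - 1) - 1
    return round(value * levels) / levels
```

Compressing before quantizing spends more of the 8 bits on quiet samples: for a sample such as 0.01, `alaw_expand(quantize(alaw_compress(0.01)))` lands closer to the original than `quantize(0.01)` does.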

The Elaboration Path and the Decoding Path

The remaining branches serve to play the received audio after the echo has been removed. Along the Decoding Path, the mixed audio is decoded from PCM A-law to raw samples, which a caps filter constrains to specific capabilities. On the Elaboration side, the same capability constraints are applied to the audio samples coming from the local microphone.

The basic idea behind echo removal is simple to explain but not so easy to implement: subtract from the mixed audio an adapted version of the samples collected from the microphone. This means that, before the unwanted voice is removed, the original microphone samples must be properly shifted in time and reduced in amplitude. While estimating the amplitude of a digital signal is fairly easy, evaluating the delay is the real problem, because the GStreamer libraries do not provide any plug-in that can be used for this purpose.
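
The subtraction described above can be sketched in a few lines of Python, assuming the delay (in samples) and the attenuation are already known. The function name `remove_echo` and its parameters are hypothetical; in the Client the same operation is carried out by pipeline elements rather than by a loop over samples.

```python
def remove_echo(mixed, mic, delay, gain):
    """Subtract a delayed, attenuated copy of the microphone signal from the mixed stream.

    mixed: samples received from the server (remote voices plus our own echo)
    mic:   samples captured locally
    delay: estimated echo delay, in samples
    gain:  estimated attenuation of our own voice inside the mixed stream
    """
    cleaned = []
    for n, sample in enumerate(mixed):
        k = n - delay  # index of the microphone sample that produced this echo
        echo = gain * mic[k] if 0 <= k < len(mic) else 0.0
        cleaned.append(sample - echo)
    return cleaned
```

If the delay is off by even a few samples, the subtraction adds a second, inverted copy of the voice instead of cancelling the first, which is why the delay estimate matters more than the amplitude estimate.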

In theory, the delay can be estimated by computing the correlation between a buffer containing audio samples from the microphone and a buffer collecting mixed audio samples. The audio streams therefore have to be prepared so that the correlation can be computed under the best conditions: their capabilities are fixed and well known thanks to the caps filters, and the streams are parsed so that the samples in their buffers can be copied into ordinary allocated memory.
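
As an illustration of the correlation idea, the following Python sketch searches for the lag at which the microphone buffer best matches the mixed buffer. It is a brute-force time-domain version written for clarity, not the Client's actual routine; `estimate_delay` and `max_lag` are names chosen for this example.

```python
def estimate_delay(mic, mixed, max_lag):
    """Return the lag, in samples, that maximizes the cross-correlation.

    For each candidate lag the score is the sum of mic[n] * mixed[n + lag];
    the lag with the highest score is taken as the echo delay.
    """
    best_lag = 0
    best_score = float("-inf")
    for lag in range(max_lag + 1):
        score = 0.0
        for n, m in enumerate(mic):
            if n + lag < len(mixed):
                score += m * mixed[n + lag]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag
```

The cost grows with the buffer length times `max_lag`, which is one reason to run the computation away from the streaming threads.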

Accessing audio samples directly, outside the structure of a plug-in, is not the natural way to proceed with the GStreamer libraries and is closer to a hack. On the other hand, it was the only way to avoid building a dedicated plug-in, which at present far exceeds our competence in the matter. The task of getting buffers from the audio streams is performed by identity blocks in the Elaboration and Decoding Paths. Each time one of the buffers has been filled, a callback function is called that extracts its samples and appends them to a larger buffer. Meanwhile, an independent thread waits for the larger buffer to be filled and then performs the correlation. The position in time of the maximum peak of the correlation gives the delay estimate, which is used to set a property of the FIR filter along the Elaboration Path. Hence, after properly delaying the incoming stream, the FIR filter inverts its amplitude and pushes it to the adder. Once the adder sums the two incoming streams, the result is an output signal without echo.
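
The hand-off between the buffer callback and the correlation thread can be sketched as follows. This is a simplified Python analogue handling a single stream, whereas the Client collects buffers from two paths; the class `BlockAccumulator` and its methods are hypothetical names.

```python
import queue
import threading


class BlockAccumulator:
    """Collects small buffers into fixed-size blocks processed by a worker thread."""

    def __init__(self, block_size, process):
        self.block_size = block_size
        self.process = process        # function applied to each full block
        self.pending = []             # samples not yet forming a full block
        self.blocks = queue.Queue()   # full blocks waiting for the worker
        self.results = []
        self.worker = threading.Thread(target=self._run, daemon=True)
        self.worker.start()

    def on_buffer(self, samples):
        """Callback run by the streaming thread for every incoming buffer."""
        self.pending.extend(samples)
        while len(self.pending) >= self.block_size:
            self.blocks.put(self.pending[:self.block_size])
            self.pending = self.pending[self.block_size:]

    def _run(self):
        while True:
            block = self.blocks.get()  # sleeps until a full block is available
            if block is None:          # sentinel: stop the worker
                break
            self.results.append(self.process(block))

    def close(self):
        """Ask the worker to finish the queued blocks and wait for it."""
        self.blocks.put(None)
        self.worker.join()
```

Keeping the callback limited to copying samples means the streaming thread is never held up by the correlation itself, which runs at its own pace in the worker.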

User Approach and solutions for the Source Code

Since the g-programming libraries introduce an object-like programming style, their many structures must be arranged in a tidy and easy-to-understand organization. Moreover, the need to keep variables in scope inside callbacks and functions led to declaring them as global. Each conceptual block of variables is then grouped in a structure accessed through a pointer, whose memory is allocated in the main function to avoid segmentation faults. Whenever an allocated resource is no longer needed, it is released immediately to prevent memory leaks.

As said above, the gst, gnet and gtk libraries belong to a more general programming style, here called g-programming. Coming from the same root, these libraries share some distinctive features, such as the possibility of being "looped" (put in run) and "stopped" through a single dedicated function, even if at first sight they seem very different from one another.

The initialization section sets up the whole environment needed for the client to work: the UI layout is loaded, the internet addresses are defined and the pipeline is set to the Null state. Then the main window is shown to the user and the g-loop is started. From this point on, the user can interact with the program.

Example of the Client Application

The first thing a user should do is change the default nickname and the default server address and port. The user can then proceed to authenticate by pressing the appropriate button. The authentication procedure is somewhat involved: first, the client and server sockets are created in order to set up a UDP handshake service. Then the Client sends an authentication enquiry to the Server through its socket and waits for a reply. Together with a positive response, the Server sends a unique identification number called SSRC, which the Client will use to mark its RTP streams so that they can be recognized. On a negative response from the Server, instead, the authentication callback simply returns.

To keep a continuous watch on the UDP handshake socket, so that a callback is executed whenever UDP packets are received, an I/O channel is created and a watch function is attached to it. As a final step, the pipeline state is changed from "Null" to "Ready".

Once authentication has been carried out correctly, the user can move on to the connection button. When it is pressed, the pipeline simply starts playing: audio streams start to flow through each branch, local samples are compressed and sent via UDP, and the echo is removed from the mixed audio, which can then be played on the speakers.

On a disconnection request, instead, several actions have to be performed. The most important one is to remove the watch functions from the I/O channel linked to the service UDP socket, in order to prevent CPU usage from jumping above 90%. Then the pipeline state is set to Null and a disconnection enquiry is sent to the Server. On a positive response, the socket is deleted and the Client returns to its base state.
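
The authentication, connection and disconnection steps described so far can be summarized as a small state machine. The Python sketch below only tracks the states; it sends no messages, and every name in it (`ClientState`, `ClientSession`, the method names) is an assumption made for illustration. It also assumes that disconnection is requested from the connected state and that the Server answers positively.

```python
from enum import Enum


class ClientState(Enum):
    IDLE = "idle"                    # no socket, pipeline in Null
    AUTHENTICATED = "authenticated"  # SSRC received, pipeline in Ready
    CONNECTED = "connected"          # pipeline in Playing


class ClientSession:
    def __init__(self):
        self.state = ClientState.IDLE
        self.pipeline_state = "Null"
        self.ssrc = None
        self.watch_active = False

    def authenticate(self, reply_ssrc):
        """reply_ssrc is the SSRC of a positive reply, or None on a refusal."""
        if self.state is not ClientState.IDLE or reply_ssrc is None:
            return False             # negative response: the callback simply returns
        self.ssrc = reply_ssrc
        self.watch_active = True     # watch installed on the handshake socket
        self.pipeline_state = "Ready"
        self.state = ClientState.AUTHENTICATED
        return True

    def connect(self):
        if self.state is not ClientState.AUTHENTICATED:
            return False
        self.pipeline_state = "Playing"
        self.state = ClientState.CONNECTED
        return True

    def disconnect(self):
        if self.state is not ClientState.CONNECTED:
            return False
        self.watch_active = False    # first of all, remove the watch
        self.pipeline_state = "Null"
        self.ssrc = None             # socket deleted after the positive reply
        self.state = ClientState.IDLE
        return True
```

Writing the order of operations down this way makes it easy to check that the watch is always removed before the pipeline is torn down.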

An important note concerns the need for I/O channels and watch functions. The GNet functions for UDP-based exchanges let the programmer choose between write and read functions. Read functions, in particular, are blocking and must therefore be used only when something, such as a reply, is expected to arrive. While the pipeline is playing there is no way to monitor the UDP socket with read functions, except perhaps from a separate thread. An elegant solution comes from watch functions, which associate the execution of a callback with the incoming UDP event in order to handle received messages.
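
The difference between a blocking read and a watch can be shown with Python's standard `selectors` module, which plays a role analogous to an I/O channel with a watch attached. This is not the GNet or GLib API: the helper names (`make_watched_socket`, `run_once`, `remove_watch`) and the loopback address are assumptions made for the example.

```python
import selectors
import socket


def make_watched_socket(selector, on_datagram):
    """Bind a UDP socket on the loopback interface and register a read callback."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
    sock.setblocking(False)
    selector.register(sock, selectors.EVENT_READ, on_datagram)
    return sock


def run_once(selector, timeout=1.0):
    """One iteration of the event loop: call the callback of each readable socket."""
    handled = 0
    for key, _events in selector.select(timeout):
        data, sender = key.fileobj.recvfrom(2048)
        key.data(data, sender)    # key.data is the callback given at registration
        handled += 1
    return handled


def remove_watch(selector, sock):
    """Stop watching the socket and close it."""
    selector.unregister(sock)
    sock.close()
```

The loop only touches the socket when a datagram is actually waiting, so the rest of the program keeps running between messages, and removing the registration before closing the socket mirrors the clean-up performed on disconnection.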

In this version of the program, the only asynchronous communication that can occur between client and server in connected mode is the one that carries information about the participants in the conference. Each time the list of participants changes because of new connections or disconnections, a refreshed list is sent to all the remaining users.

Every action performed by the Client and every string transmitted or received is appended to a log window placed at the bottom of the main window. Moreover, a list of the connected users is shown on its right side. There is also a widget that controls the volume of the local speakers.