As stated in the introduction, the server has the simple task of mixing together the different audio streams sent by the clients. Before continuing with the explanation of the server structure, it is worth giving a brief description of the UDP and RTP protocols.

Why UDP/RTP?

Using UDP was compulsory because, as already mentioned, the server has to send the stream to the clients in broadcast. TCP could not be used because it is connection-oriented and does not permit broadcast communication. Unlike TCP, UDP supports broadcast communication, but it essentially provides only transport-layer (layer 4) addressing, i.e. the port number: there is no sequence number in the UDP header with which to check that packets are received correctly. For this reason, real-time streaming over networks usually also relies on RTP (Real-time Transport Protocol). This protocol provides two important features:

- The sequence number, which makes it possible to check whether packets are received in the right order and to detect missing ones

- The SSRC (synchronization source identifier), a 32-bit number that uniquely identifies the source of a stream. This allows the server to tell which client a given stream comes from. The importance of the SSRC will become clearer in the following sections.
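To show where these two fields live, here is a minimal Python sketch (not part of the original application) that parses the 12-byte fixed RTP header defined in RFC 3550. The names `RtpHeader` and `parse_rtp_header` are hypothetical; only the header layout comes from the standard, and the padding and extension bits are ignored for brevity.

```python
import struct
from typing import NamedTuple


class RtpHeader(NamedTuple):
    version: int
    marker: bool
    payload_type: int
    sequence_number: int
    timestamp: int
    ssrc: int
    payload: bytes


def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Parse the fixed RTP header (RFC 3550, section 5.1).

    This sketch ignores the padding (P) and header-extension (X) bits.
    """
    if len(packet) < 12:
        raise ValueError("packet too short for an RTP header")
    # Network byte order: two flag bytes, 16-bit sequence number,
    # 32-bit timestamp, 32-bit SSRC.
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])
    version = b0 >> 6
    if version != 2:
        raise ValueError("not an RTP version 2 packet")
    csrc_count = b0 & 0x0F
    # Skip the optional CSRC list (4 bytes per contributing source).
    header_len = 12 + 4 * csrc_count
    return RtpHeader(
        version=version,
        marker=bool(b1 & 0x80),
        payload_type=b1 & 0x7F,
        sequence_number=seq,
        timestamp=ts,
        ssrc=ssrc,
        payload=packet[header_len:],
    )
```

The sequence number lets a receiver order packets and spot gaps, while the SSRC is the key by which streams arriving on the same socket can be told apart.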

Some tests showed that, especially over wireless networks, using RTP generally led to better communication quality.

RTP is usually paired with RTCP (Real-time Transport Control Protocol), a protocol that collects statistics about the QoS (quality of service) of the RTP stream. RTCP packets carry information on the number of lost packets and on the identity of the host, which among other things helps ensure that different users always have different SSRCs. Initially the application was meant to handle both protocols, RTP and RTCP, but since tests showed no real difference in communication quality with or without RTCP, we decided to use RTP streams only.
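Even without RTCP, the loss figure it reports can be derived from RTP sequence numbers alone. The Python sketch below is a simplified form of the bookkeeping described in RFC 3550 (appendix A.3), not code from this application; the class name `LossTracker` is hypothetical, and reordering beyond half the sequence space or source restarts are not handled.

```python
from typing import Optional


class LossTracker:
    """Estimate cumulative packet loss from 16-bit RTP sequence numbers."""

    def __init__(self) -> None:
        self.base_seq: Optional[int] = None
        self.max_ext_seq = 0  # highest "extended" sequence number seen
        self.received = 0

    def on_packet(self, seq: int) -> None:
        if self.base_seq is None:
            self.base_seq = seq
            self.max_ext_seq = seq
        else:
            cycles = self.max_ext_seq & ~0xFFFF  # wrap-arounds counted so far
            last = self.max_ext_seq & 0xFFFF
            delta = (seq - last) & 0xFFFF
            if delta < 0x8000:  # packet is ahead of the current maximum
                if seq < last:  # sequence number wrapped from 65535 to 0
                    cycles += 0x10000
                self.max_ext_seq = cycles | seq
        self.received += 1

    @property
    def expected(self) -> int:
        if self.base_seq is None:
            return 0
        return self.max_ext_seq - self.base_seq + 1

    @property
    def lost(self) -> int:
        # Late duplicates can make this negative, as in RFC 3550.
        return self.expected - self.received
```

For example, feeding the sequence numbers 65534, 65535, 1, 2 gives 5 expected packets and 1 lost (number 0), despite the wrap-around.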

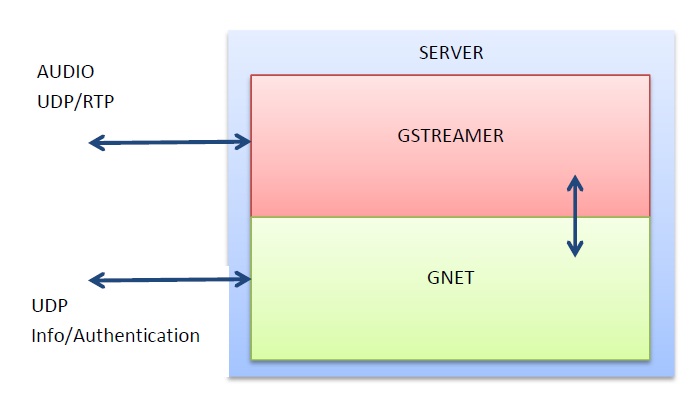

The server is essentially divided into two parts. The first, which we can call the GStreamer part, receives, processes and re-sends the audio streams; the second, the GNet part, communicates with the clients for authentication and other information.

The GStreamer part

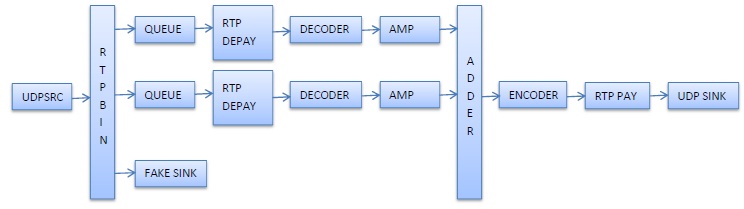

The GStreamer part contains the pipeline, whose structure is shown in the figure. We can study the pipeline by analyzing its individual elements.

UDPSRC - This element picks up UDP packets received on a selected port, which can be chosen by setting the element's properties. The element passes the content of the packets on to the next element.

RTPBIN - This is perhaps the most important element of the server pipeline. This powerful element works with RTP and RTCP streams: it can separate different streams by checking their SSRC, it can compensate for network jitter, and it can manage on its own the RTCP streams paired with the RTP streams. The ability of RTPBIN to separate streams by SSRC made it possible to use a single UDP socket to receive the streams from all clients, and therefore to occupy only one server port for all of them instead of one port per client. As said before, the RTCP features of the element are not used in this project; however, RTCP can be added by modifying just a few lines of the program.

As can be seen in the figure, RTPBIN has several output (source) pads, which are created dynamically. Every time a new RTP stream, identified by its SSRC, is received by the UDPSRC element, RTPBIN creates a new pad for that stream. The creation is automatic, and at the same time the element emits the "pad-added" signal. This signal is connected to a callback that is executed every time a new pad is created. The callback checks the SSRC of the new stream: if the SSRC is on the authorized list, the stream comes from a new user and must be mixed with the others; if the SSRC is not authorized, the stream must not be mixed. Depending on whether the SSRC is authorized, the callback links different types of elements to the new pad. When packets of one of the RTP streams are no longer received, RTPBIN removes the pad and emits the "on-sender-timeout" signal. This signal is also connected to a callback, whose role is discussed later.
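To make the dynamic-pad logic concrete, here is a hedged, GStreamer-free Python model of it. The names `SsrcDemuxer` and `pad_added` are hypothetical, and the branch labels stand in for real elements: an actual implementation would link GStreamer elements inside a "pad-added" handler rather than append payloads to lists.

```python
from typing import Callable, Dict, List, Set


class SsrcDemuxer:
    """Model of an rtpbin-like element splitting one socket's traffic by SSRC.

    On the first packet of an unknown SSRC it "creates a pad" and calls
    `on_pad_added`, which returns the branch the stream is routed to.
    """

    def __init__(self, on_pad_added: Callable[[int], str]) -> None:
        self.on_pad_added = on_pad_added
        self.branches: Dict[int, str] = {}  # SSRC -> linked branch
        self.routed: Dict[str, List[bytes]] = {"mixer": [], "fakesink": []}

    def push(self, ssrc: int, payload: bytes) -> None:
        if ssrc not in self.branches:
            # New stream: let the callback decide what to link to the new pad.
            self.branches[ssrc] = self.on_pad_added(ssrc)
        self.routed[self.branches[ssrc]].append(payload)


# Hypothetical list of SSRCs handed out during authentication.
authorized: Set[int] = {0x1111, 0x2222}


def pad_added(ssrc: int) -> str:
    # Authorized streams go to depayloader, decoder and mixer;
    # anything else is linked to a fakesink and discarded.
    return "mixer" if ssrc in authorized else "fakesink"
```

A single receiving socket is enough here because the SSRC, not the port, is what distinguishes one client's stream from another's.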

QUEUE - These elements do not modify the stream; they simply split the pipeline into different threads. A queue element should be used wherever the pipeline forks, because each branch should run in its own thread to avoid synchronization problems.
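The thread decoupling a queue provides can be illustrated with Python's standard `queue` and `threading` modules. This is a simplified analogy assuming one producer and one consumer, not GStreamer's queue implementation; `run_branch` is a hypothetical name.

```python
import queue
import threading
from typing import List


def run_branch(buffers: List[bytes]) -> List[bytes]:
    """The upstream thread pushes buffers into a bounded queue, while a
    separate downstream thread consumes them at its own pace."""
    q = queue.Queue(maxsize=16)
    consumed: List[bytes] = []

    def downstream() -> None:
        while True:
            buf = q.get()
            if buf is None:  # end-of-stream marker
                break
            consumed.append(buf)

    worker = threading.Thread(target=downstream)
    worker.start()
    for buf in buffers:  # upstream side, e.g. data leaving the demuxer
        q.put(buf)       # blocks only when the queue is full
    q.put(None)
    worker.join()
    return consumed
```

The producer never waits for the consumer to finish processing a buffer, only for free space in the queue, which is what keeps one branch from stalling another.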

RTPDEPAY and DECODER - When the SSRC is authorized, the RTP stream must be mixed with the others, and before mixing it has to be decoded. This is done by the "rtppcmadepay" element, which extracts the PCM A-law audio stream from the RTP packets, and by "alawdec", which converts the A-law stream into linear raw audio, a format the ADDER element can mix.
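The conversion alawdec performs is the standard ITU-T G.711 A-law expansion. The sketch below follows the structure of the widely used g711.c reference decoder; it illustrates the algorithm and is not GStreamer's source. `alaw_to_linear` and `decode_payload` are hypothetical names.

```python
from typing import List


def alaw_to_linear(code: int) -> int:
    """Expand one G.711 A-law byte to a 16-bit linear PCM sample."""
    code ^= 0x55                   # undo the inversion of the even bits
    mantissa = (code & 0x0F) << 4  # 4 quantization bits
    segment = (code & 0x70) >> 4   # 3 segment (exponent) bits
    if segment == 0:
        value = mantissa + 8
    else:
        value = (mantissa + 0x108) << (segment - 1)
    return value if code & 0x80 else -value  # sign bit set means positive


def decode_payload(payload: bytes) -> List[int]:
    """Decode an RTP PCMA payload (one byte per sample) to linear samples."""
    return [alaw_to_linear(b) for b in payload]
```

Each byte expands to one 16-bit sample, so a 160-byte payload yields 160 linear samples (20 ms at 8 kHz).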

AMPLIFIER - This element simply multiplies the audio samples of the stream by a factor inversely proportional to the number of participants in the conference: with 2 participants the samples are multiplied by 1/2, with 3 participants by 1/3, and so on. This prevents the mixed audio stream from saturating.
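The arithmetic behind the 1/N factor can be checked in a few lines of Python: if each of N inputs is scaled by 1/N, even N simultaneous full-scale samples add up to no more than full scale. `attenuate` is a hypothetical helper, not the server's code, and truncation toward zero is a choice of this sketch.

```python
from typing import List

INT16_MAX = 32767


def attenuate(samples: List[int], participants: int) -> List[int]:
    """Scale 16-bit samples by 1/participants, truncating toward zero."""
    if participants < 1:
        raise ValueError("participants must be >= 1")
    gain = 1.0 / participants
    return [int(s * gain) for s in samples]


# Worst case: all participants send a full-scale sample at the same instant.
n = 3
scaled = attenuate([INT16_MAX], n)[0]
mixed = scaled * n  # what the adder would produce for this sample
```

Here `scaled` is 10922 and `mixed` is 32766, which still fits in a signed 16-bit sample.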

ADDER - This element simply adds the audio samples that arrive at its inputs.
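A minimal model of the mixing step: the Python sketch below sums 16-bit samples position by position. Clipping to the int16 range is an assumption of this sketch about how overflow is handled, and `mix` is a hypothetical name. It also mirrors the adder's requirement that every input supplies data.

```python
from typing import List, Sequence

INT16_MIN, INT16_MAX = -32768, 32767


def mix(inputs: Sequence[Sequence[int]]) -> List[int]:
    """Sum equally long int16 buffers, one per input, clipping the result."""
    lengths = {len(buf) for buf in inputs}
    if len(lengths) != 1:
        raise ValueError("every input must provide the same number of samples")
    out = []
    for column in zip(*inputs):  # one sample from each input
        total = sum(column)
        out.append(max(INT16_MIN, min(INT16_MAX, total)))  # clip, don't wrap
    return out
```

Without the AMPLIFIER stage, loud inputs such as 30000 + 10000 would be clipped to 32767, which is the saturation the 1/N gain avoids.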

RTPPAY and ENCODER - After the streams are mixed together, the resulting stream must be sent back to the clients. To do this, the raw audio stream is first encoded as PCM A-law by "alawenc", and then encapsulated in RTP packets by the "rtppcmapay" element.
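The encoding step is the inverse G.711 A-law compression. This sketch follows the structure of the g711.c reference encoder and is an illustration, not alawenc's source; `linear_to_alaw` and `encode_samples` are hypothetical names.

```python
from typing import Sequence

# Upper bound of each A-law segment for the 13-bit magnitude (sample >> 3).
SEG_END = (0x1F, 0x3F, 0x7F, 0xFF, 0x1FF, 0x3FF, 0x7FF, 0xFFF)


def linear_to_alaw(sample: int) -> int:
    """Compress one 16-bit linear PCM sample to a G.711 A-law byte."""
    pcm = sample >> 3  # A-law operates on 13-bit values
    if pcm >= 0:
        mask = 0xD5    # sign bit set for positive values, even bits inverted
    else:
        mask = 0x55
        pcm = -pcm - 1
    segment = next((i for i, end in enumerate(SEG_END) if pcm <= end), 8)
    if segment >= 8:   # out of range: clamp to the largest code
        return 0x7F ^ mask
    code = segment << 4
    shift = 1 if segment < 2 else segment
    code |= (pcm >> shift) & 0x0F  # 4 quantization bits
    return code ^ mask


def encode_samples(samples: Sequence[int]) -> bytes:
    """Encode linear samples to an A-law byte string (one byte per sample)."""
    return bytes(linear_to_alaw(s) for s in samples)
```

Silence (0) encodes to 0xD5, and the int16 extremes map to 0xAA and 0x2A.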

UDPSINK - Finally, the RTP/UDP packets are broadcast over the network by the UDP sink element.
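The last two stages together can be sketched with the Python standard library: wrap each A-law frame in an RTP header with the static PCMA payload type 8 (RFC 3551) and send it from a UDP socket with `SO_BROADCAST` enabled. `RtpSender` is a hypothetical helper; the real server uses the rtppcmapay and udpsink elements.

```python
import socket
import struct
from typing import Tuple

PT_PCMA = 8  # static RTP payload type for PCMA (RFC 3551)


class RtpSender:
    """Packetize A-law frames into RTP and send them over UDP."""

    def __init__(self, dest: Tuple[str, int], ssrc: int,
                 seq: int = 0, ts: int = 0) -> None:
        self.dest = dest
        self.ssrc = ssrc
        self.seq = seq
        self.ts = ts
        self.sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        # Needed before sending to a broadcast address.
        self.sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)

    def build_packet(self, alaw_payload: bytes) -> bytes:
        # 0x80: RTP version 2, no padding, no extension, no CSRCs.
        header = struct.pack("!BBHII", 0x80, PT_PCMA, self.seq, self.ts, self.ssrc)
        self.seq = (self.seq + 1) & 0xFFFF
        # PCMA uses an 8 kHz clock and one byte per sample, so the
        # timestamp advances by the payload length.
        self.ts = (self.ts + len(alaw_payload)) & 0xFFFFFFFF
        return header + alaw_payload

    def send(self, alaw_payload: bytes) -> None:
        self.sock.sendto(self.build_packet(alaw_payload), self.dest)

    def close(self) -> None:
        self.sock.close()
```

In a broadcast deployment `dest` would be something like `("192.168.1.255", 5004)`; both values are placeholders, not the server's actual configuration.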

FAKESINK - When the SSRC is not authenticated, the stream must not be mixed with the others, so a fakesink is linked to the new pad. A fakesink is a sort of black hole for data: it simply discards every buffer it receives. Even though we are not interested in the data arriving from a non-authorized SSRC, we cannot leave the pad unlinked, because the buffers must be consumed so that their memory is released (unreferenced) and program errors are avoided.

The GNet part

The GNet part receives requests and information from the clients and sends responses back to them. It is essentially composed of a UDP socket and a callback function executed every time a new packet is received. Depending on the packet's content, the callback takes the appropriate action. There are three types of request:

- Authentication request: the client sends the server a certain string followed by its nickname. If the server is not full (the maximum number of participants is 8), it sends the client a randomly generated SSRC, different from the SSRCs used by all the other users, and adds this SSRC to the list of authenticated SSRCs.

- Disconnection request: when a participant wants to leave the session, it has to inform the server. This prevents communication problems for the other participants in the conference.

- Address info: if the client sends an address info request, the server replies with the port on which it listens for incoming RTP streams and with the multicast/broadcast address (IP and port number) of the mixed stream.
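The request handling above can be modelled in a few lines of Python. The request keywords (`AUTH`, `BYE`, `INFO`), the reply formats and the idea that a disconnection carries the client's SSRC are all assumptions of this sketch, since the original protocol strings are not given; `SessionRegistry` is a hypothetical name.

```python
import random
from typing import Dict, Optional

MAX_PARTICIPANTS = 8


class SessionRegistry:
    """Hypothetical model of the GNet-side request handling."""

    def __init__(self, rtp_port: int, mix_addr: str, mix_port: int,
                 rng: Optional[random.Random] = None) -> None:
        self.users: Dict[int, str] = {}  # authenticated SSRC -> nickname
        self.rtp_port = rtp_port
        self.mix_addr = mix_addr
        self.mix_port = mix_port
        self.rng = rng or random.Random()

    def _new_ssrc(self) -> int:
        while True:
            ssrc = self.rng.getrandbits(32)  # SSRCs are 32-bit values
            if ssrc not in self.users:       # must differ from all other users
                return ssrc

    def handle(self, request: str) -> str:
        cmd, _, arg = request.partition(" ")
        if cmd == "AUTH":
            if len(self.users) >= MAX_PARTICIPANTS:
                return "FULL"
            ssrc = self._new_ssrc()
            self.users[ssrc] = arg
            return f"SSRC {ssrc}"
        if cmd == "BYE":
            if arg.isdigit():
                self.users.pop(int(arg), None)
            return "OK"
        if cmd == "INFO":
            return f"PORT {self.rtp_port} MIX {self.mix_addr}:{self.mix_port}"
        return "ERROR"
```

The SSRC returned by `AUTH` is exactly what the "pad-added" callback later checks against the authorized list.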

There is one more type of message that the server sends to the participants in the conference. Every time a user logs in or out, the server sends all participants the number and the list of nicknames of the users still logged in. This serves two purposes. First, it tells the client the number of participants, which simplifies the task of the echo filter: if the filter knows how many participants there are, it already knows the attenuation factor. Second, it simply lets the user know who the other participants in the conference are.
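As a sketch of that exchange, the Python below builds and parses a participant-list message and derives the 1/N attenuation factor on the client side. The `USERS <count> <nick1,nick2,...>` format is a hypothetical one chosen for illustration, and it assumes nicknames contain no spaces or commas.

```python
from typing import List, Tuple


def build_user_list(nicknames: List[str]) -> str:
    """Server side: hypothetical 'USERS <count> <nick1,nick2,...>' message."""
    return f"USERS {len(nicknames)} {','.join(nicknames)}"


def parse_user_list(message: str) -> Tuple[int, List[str], float]:
    """Client side: participant count, nicknames, and the 1/N gain that the
    server's amplifier stage applies to every stream."""
    _, count, names = message.split(" ", 2)
    n = int(count)
    nicknames = names.split(",") if names else []
    gain = 1.0 / n if n else 0.0
    return n, nicknames, gain
```

With three participants, the echo filter can assume the client's own voice comes back attenuated by 1/3.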

User Disconnection

At this point the description of the server's structure is almost complete; only a few details remain to be explained.

When a client sends a disconnection request, the server modifies the pipeline, removing that user's RTPDEPAY and DECODER elements and linking a fakesink in their place. Why does the server do this, and why can the client not simply stop the stream to leave the conference?

To understand this, we need to know how the adder works. We said that the adder simply sums the audio samples present at its inputs; however, if one input has no samples, the pipeline stalls, because the adder keeps waiting for samples on that input. We therefore have to guarantee that audio samples are always present at every input of the adder. If a user leaves the session without informing the server, the pipeline stalls and no more RTP packets are sent.

This is where the "on-sender-timeout" signal emitted by RTPBIN becomes helpful. When this signal is emitted, a sender has stopped sending RTP packets, so the callback removes the elements linked to that sender's pad on RTPBIN. If the pad was linked to a fakesink, the sender was either non-authenticated or a user that had already informed the server that it was leaving. If instead the pad was linked to an RTPDEPAY, a user stopped sending packets without informing the server: the server removes that user from the list of participants and restores the audio conference for the remaining participants.
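The decision logic of the disconnection and timeout callbacks can be summarized as a small state model. This Python sketch is hypothetical (class and method names included) and only tracks which kind of branch each SSRC's pad is linked to; it does not manipulate a real pipeline.

```python
from typing import Dict, Set


class ConferenceState:
    """Model of the disconnection and sender-timeout handling.

    `branches` maps each SSRC with a pad on the rtpbin to the kind of branch
    linked to it: "decoder" (depayloader + decoder feeding the adder) or
    "fakesink".
    """

    def __init__(self) -> None:
        self.participants: Set[int] = set()
        self.branches: Dict[int, str] = {}

    def on_new_stream(self, ssrc: int, authorized: bool) -> None:
        if authorized:
            self.participants.add(ssrc)
            self.branches[ssrc] = "decoder"
        else:
            self.branches[ssrc] = "fakesink"

    def on_disconnect_request(self, ssrc: int) -> None:
        # Replace the decoder branch with a fakesink: the adder input is
        # released, and the pad stays drained until the stream times out.
        if self.branches.get(ssrc) == "decoder":
            self.branches[ssrc] = "fakesink"
        self.participants.discard(ssrc)

    def on_sender_timeout(self, ssrc: int) -> bool:
        """Remove the pad's branch; return True for an unannounced departure."""
        kind = self.branches.pop(ssrc, None)
        if kind == "decoder":
            # The user stopped sending without a disconnection request:
            # drop them so the adder no longer waits on that input.
            self.participants.discard(ssrc)
            return True
        return False  # fakesink: unauthorized, or departure already announced
```

Only a timeout on a "decoder" branch requires restoring the conference; timeouts on fakesink branches are routine cleanup.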

This complicated procedure could be avoided by using the "liveadder" element instead of "adder". Liveadder is another element available in the GStreamer libraries, and it can mix discontinuous audio streams. It does not require audio samples to be present on all of its inputs: an input without samples is simply ignored, and the element processes only the available data without blocking the pipeline. Unfortunately, this element has a serious bug: it apparently does not release all the memory it uses, so with it the server application's memory usage grows indefinitely.
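The behavioural difference from adder can be sketched as a mixing function that skips inputs with no data for the current frame instead of waiting for them. This is a conceptual Python illustration with the hypothetical name `live_mix`; it says nothing about liveadder's internals, such as how it aligns streams by timestamp.

```python
from typing import Dict, List, Optional

INT16_MIN, INT16_MAX = -32768, 32767


def live_mix(inputs: Dict[int, Optional[List[int]]], frame_len: int) -> List[int]:
    """Mix one frame; inputs with no data (None) are ignored, not waited for."""
    out = [0] * frame_len
    for samples in inputs.values():
        if samples is None:  # nothing arrived on this input: skip it
            continue
        for i, s in enumerate(samples[:frame_len]):
            out[i] += s
    return [max(INT16_MIN, min(INT16_MAX, v)) for v in out]
```

A silent or departed participant then simply contributes nothing, which is why no timeout bookkeeping would be needed.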

Example of how the Server works

Conclusions

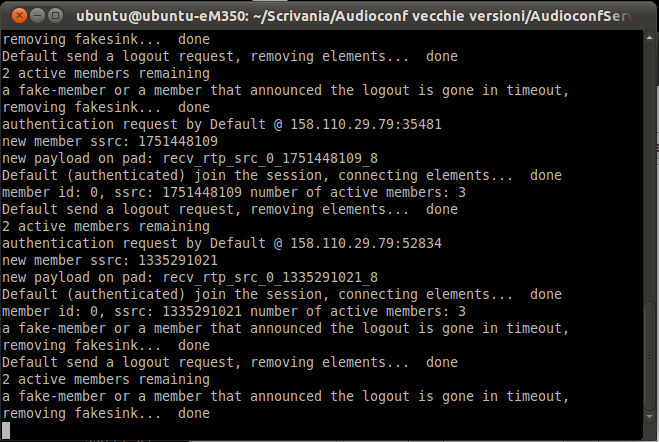

The structure of the server is quite linear and easy to understand, and tests of the server application have not revealed any significant bugs. The authentication procedure is not essential for the basic operation of the program, but it was added to provide a minimal level of security. The server is a terminal application without a GUI; it reports every action it performs in the terminal (authentication requests, pipeline state, and so on).