```csharp
using System;
using System.Threading.Tasks;
using Windows.Globalization;
using Windows.Media.Playback;
using Windows.Media.SpeechRecognition;

private async Task AssistantSetup()
{
    // Initialize recognizers (speech-to-text) and speaker (text-to-speech)
    Language language = new Language("en-US");
    background_recognizer = new SpeechRecognizer(language);
    task_recognizer = new SpeechRecognizer(language);
    speaker = new SpeechService("Male", "en-US");

    task_recognizer.Timeouts.InitialSilenceTimeout = TimeSpan.FromSeconds(10);
    task_recognizer.Timeouts.EndSilenceTimeout = TimeSpan.FromSeconds(5);

    // Initialize media player
    media_player = BackgroundMediaPlayer.Current;
    await CreateSongsList();

    // Compile the recognizers' grammars
    SpeechRecognitionCompilationResult background_compilation_result = await background_recognizer.CompileConstraintsAsync();
    SpeechRecognitionCompilationResult task_compilation_result = await task_recognizer.CompileConstraintsAsync();

    // Set event handlers before starting the session, so that no early result is missed
    background_recognizer.ContinuousRecognitionSession.ResultGenerated += ContinuousRecognitionSessionResultGenerated;
    background_recognizer.StateChanged += BackgroundRecognizerStateChanged;
    media_player.MediaEnded += MediaPlayerMediaEnded;
    media_player.CurrentStateChanged += MediaPlayerCurrentStateChanged;

    // If both compilations have been successful, start the continuous recognition session
    if (background_compilation_result.Status == SpeechRecognitionResultStatus.Success &&
        task_compilation_result.Status == SpeechRecognitionResultStatus.Success)
    {
        await background_recognizer.ContinuousRecognitionSession.StartAsync();
    }

    await GUIOutput("Hi, I'm Alan. How can I help you?", true);
}
```

Finite-state machine structure

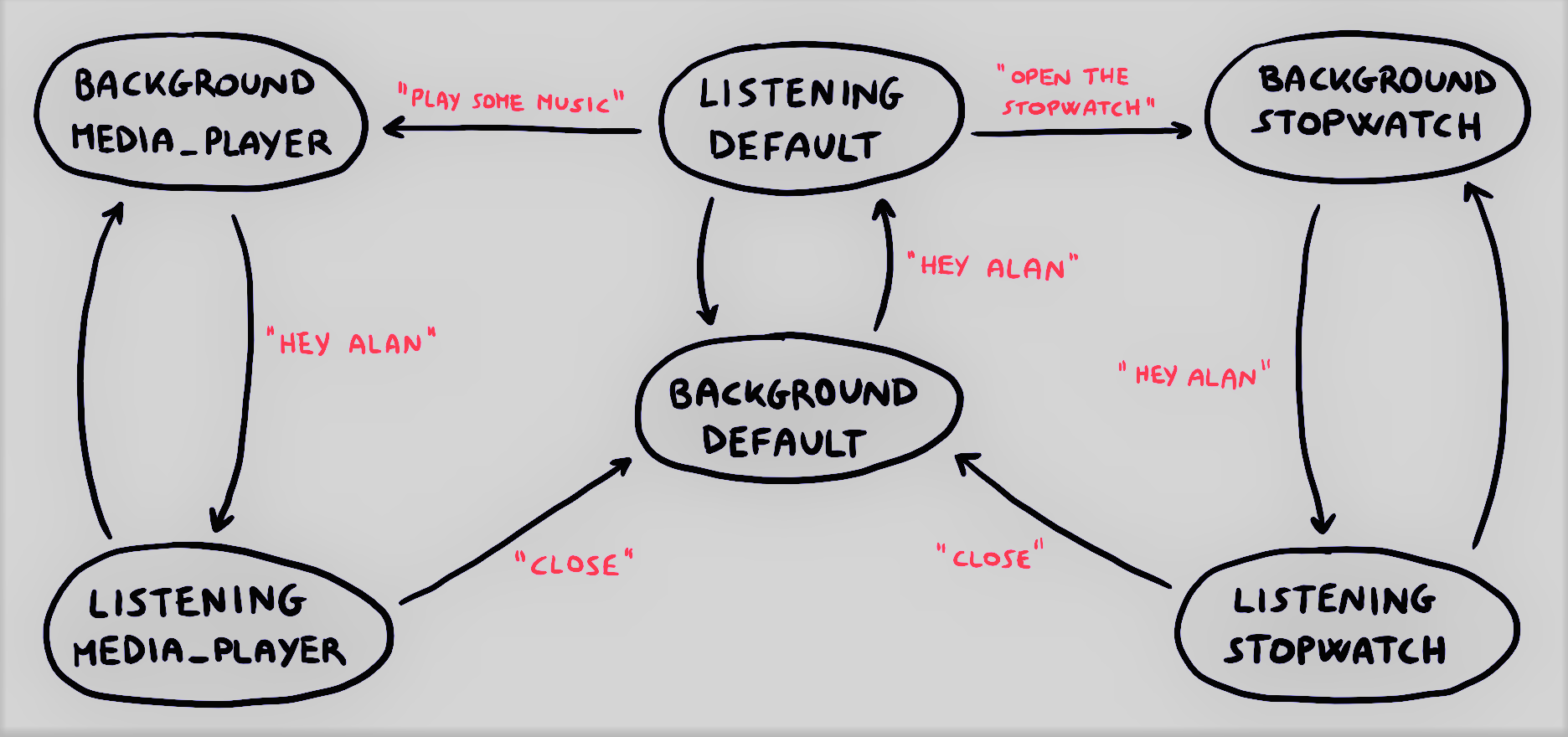

As we can see from the picture above, the software has been shaped as a finite-state machine: the tasks that the assistant can carry out depend on the current state, and the transitions between states follow a specific pattern. The default state is BACKGROUND_DEFAULT, an idle state in which the assistant merely runs a continuous speech recognition session; in particular, the program keeps recognizing speech until it detects the phrase "Hey Alan". When this happens, the continuous recognition session is stopped and the program moves to the LISTENING_DEFAULT state. The assistant then says "I'm listening" to notify the user that it is ready to carry out a task. Notice that, to improve the interaction, the assistant says "I'm sorry, I couldn't understand" whenever the spoken command doesn't match any of the commands associated with the available features.

The states BACKGROUND_MEDIA_PLAYER and BACKGROUND_STOPWATCH are similar to BACKGROUND_DEFAULT, but they are related to the media player and stopwatch features, respectively. In these cases it is still the phrase "Hey Alan" that triggers the transition to the corresponding states, LISTENING_MEDIA_PLAYER and LISTENING_STOPWATCH, in which the assistant expects a command related to the currently running feature.
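The transitions described above can be expressed without touching any speech API. The following is a minimal sketch, not the author's code: it assumes an enum AssistantState with the six state names used in the text (its declaration is not shown in the source), and the helper class AssistantFsm, its methods and the case-insensitive wake-phrase check are hypothetical. Since the figure is not reproduced here, it also assumes that an unrecognized command sends each LISTENING_* state back to its own BACKGROUND_* state, which is what StandardTasksList does for the default state.

```csharp
using System;

// Assumed declaration: the six state names come from the text, the enum itself is not shown there.
public enum AssistantState
{
    BACKGROUND_DEFAULT,
    LISTENING_DEFAULT,
    BACKGROUND_MEDIA_PLAYER,
    LISTENING_MEDIA_PLAYER,
    BACKGROUND_STOPWATCH,
    LISTENING_STOPWATCH
}

// Hypothetical helper: pure transition functions, independent of the speech recognizers.
public static class AssistantFsm
{
    public const string WakePhrase = "hey alan";

    // True if the recognized text contains the wake phrase, ignoring case.
    public static bool ContainsWakePhrase(string recognizedText) =>
        recognizedText != null &&
        recognizedText.IndexOf(WakePhrase, StringComparison.OrdinalIgnoreCase) >= 0;

    // "Hey Alan" moves each BACKGROUND_* state to its LISTENING_* counterpart.
    public static AssistantState OnWakePhrase(AssistantState state) => state switch
    {
        AssistantState.BACKGROUND_DEFAULT => AssistantState.LISTENING_DEFAULT,
        AssistantState.BACKGROUND_MEDIA_PLAYER => AssistantState.LISTENING_MEDIA_PLAYER,
        AssistantState.BACKGROUND_STOPWATCH => AssistantState.LISTENING_STOPWATCH,
        _ => state // already listening: no transition
    };

    // Assumption: an unrecognized command returns to the background state of the same feature.
    public static AssistantState OnUnrecognizedCommand(AssistantState state) => state switch
    {
        AssistantState.LISTENING_DEFAULT => AssistantState.BACKGROUND_DEFAULT,
        AssistantState.LISTENING_MEDIA_PLAYER => AssistantState.BACKGROUND_MEDIA_PLAYER,
        AssistantState.LISTENING_STOPWATCH => AssistantState.BACKGROUND_STOPWATCH,
        _ => state
    };
}
```

Keeping the transitions in small pure functions like these makes them easy to unit-test without a microphone; a method such as TaskSelection could then call OnWakePhrase instead of repeating the if/else chain.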

Main code snippets

Let's take a closer look at the parts of the code that show, at a high level, how the assistant works. Specifically, we will analyze both the part that handles speech recognition and synthesis and the part that chooses the action to perform according to the current state and the user's command.

In the first four statements of AssistantSetup we set US English (en-US) as the language for both recognition and synthesis. Notice that there are two recognition agents (background_recognizer and task_recognizer) and one synthesis agent (speaker). The difference between background_recognizer and task_recognizer is that the latter is used only to recognize single phrases (the commands), whereas background_recognizer runs a continuous session. In particular, for task_recognizer we set the following timeouts:

- InitialSilenceTimeout (10 seconds here) sets the time interval during which the recognizer accepts input containing only silence before finalizing recognition.
- EndSilenceTimeout (5 seconds here) sets the interval of silence that the recognizer will accept at the end of an unambiguous input before finalizing a recognition operation.
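To make the two timeouts more concrete, here is a deliberately simplified model of how they end a single recognition attempt. It is a hypothetical sketch and not how SpeechRecognizer works internally: audio is reduced to fixed-length frames marked as speech or silence, the notion of "unambiguous" input is ignored, and the names SilenceTimeoutModel, TimeoutOutcome and FinalizeReason are invented for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical types for a simplified model; they are not part of the Windows speech APIs.
public enum FinalizeReason { InitialSilence, EndSilence, EndOfInput }

public readonly struct TimeoutOutcome
{
    public TimeoutOutcome(FinalizeReason reason, TimeSpan at) { Reason = reason; At = at; }
    public FinalizeReason Reason { get; }
    public TimeSpan At { get; } // time at which the attempt is finalized
}

public static class SilenceTimeoutModel
{
    // frames[i] is true if the i-th frame contains speech; every frame lasts frameLength.
    public static TimeoutOutcome Run(IReadOnlyList<bool> frames, TimeSpan frameLength,
                                     TimeSpan initialSilenceTimeout, TimeSpan endSilenceTimeout)
    {
        bool heardSpeech = false;
        TimeSpan silence = TimeSpan.Zero; // length of the current run of silent frames

        for (int i = 0; i < frames.Count; i++)
        {
            if (frames[i])
            {
                heardSpeech = true;
                silence = TimeSpan.Zero; // speech resets the silence counter
                continue;
            }

            silence += frameLength;
            TimeSpan now = TimeSpan.FromTicks(frameLength.Ticks * (i + 1)); // end of frame i

            // Only silence so far: the initial timeout applies.
            if (!heardSpeech && silence >= initialSilenceTimeout)
                return new TimeoutOutcome(FinalizeReason.InitialSilence, now);

            // Some speech was heard: trailing silence is bounded by the end timeout.
            if (heardSpeech && silence >= endSilenceTimeout)
                return new TimeoutOutcome(FinalizeReason.EndSilence, now);
        }

        return new TimeoutOutcome(FinalizeReason.EndOfInput,
                                  TimeSpan.FromTicks(frameLength.Ticks * frames.Count));
    }
}
```

With 1-second frames and the values used for task_recognizer (10 s and 5 s), twelve silent frames end the attempt at the 10 s mark with InitialSilence, while three silent frames, two speech frames and then silence end it at the 10 s mark with EndSilence, after 5 s of trailing silence.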

```csharp
private async Task TaskSelection()
{
    if (state == AssistantState.BACKGROUND_DEFAULT)
        state = AssistantState.LISTENING_DEFAULT;
    else if (state == AssistantState.BACKGROUND_MEDIA_PLAYER)
    {
        state = AssistantState.LISTENING_MEDIA_PLAYER;
        // Save the current volume, then turn the music down while listening
        media_player_volume = media_player.Volume;
        ChangeMediaPlayerVolume(10.0);
    }
    else if (state == AssistantState.BACKGROUND_STOPWATCH)
        state = AssistantState.LISTENING_STOPWATCH;

    // Stop continuous recognition session and wait for user's command
    await background_recognizer.ContinuousRecognitionSession.StopAsync();
    await GUIOutput("I'm listening.", true);
    SpeechRecognitionResult command = await task_recognizer.RecognizeAsync();

    // The list of possible tasks changes according to the state
    if (state == AssistantState.LISTENING_DEFAULT)
        await StandardTasksList(command.Text);
    else if (state == AssistantState.LISTENING_MEDIA_PLAYER)
        await MediaPlayerTasksList(command.Text);
    else if (state == AssistantState.LISTENING_STOPWATCH)
    {
        if (command.Text == "close")
            CloseStopwatch();
        else
        {
            await GUIOutput("I'm sorry, I couldn't understand.", true);
            // Go back to the stopwatch's background state instead of staying in LISTENING_STOPWATCH
            state = AssistantState.BACKGROUND_STOPWATCH;
        }
    }

    // Restart continuous recognition session
    await background_recognizer.ContinuousRecognitionSession.StartAsync();
}
```

This piece of code performs the state transitions (e.g. from BACKGROUND_DEFAULT to LISTENING_DEFAULT), stops the continuous session, waits for a single command through task_recognizer and then selects the command set that matches the new state: StandardTasksList and MediaPlayerTasksList are separate functions, while in LISTENING_STOPWATCH the only accepted command is "close". Finally, the continuous recognition session is restarted. Notice that, if the media player is running (i.e. the current state is BACKGROUND_MEDIA_PLAYER), the current volume is saved in media_player_volume and the music is turned down, so that it interferes less with the recognition of the spoken command.
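TaskSelection saves the volume in media_player_volume before lowering it, so that it can be restored later (the restoring code is not part of this excerpt). A minimal sketch of that save, lower and restore pattern follows. VolumeDucker is a hypothetical helper that takes a getter and a setter instead of a MediaPlayer, so that it runs without the UWP APIs; it assumes volumes in the 0.0 to 1.0 range, which is the range of MediaPlayer.Volume. How the original ChangeMediaPlayerVolume interprets its argument of 10.0 is not shown, so the sketch does not try to reproduce it.

```csharp
using System;

// Hypothetical helper (not part of the original project): remembers the playback volume,
// lowers it while a command is being recognized, and puts it back afterwards.
public sealed class VolumeDucker
{
    private readonly Func<double> getVolume;
    private readonly Action<double> setVolume;
    private double? savedVolume; // null when the volume is not currently lowered

    public VolumeDucker(Func<double> getVolume, Action<double> setVolume)
    {
        this.getVolume = getVolume ?? throw new ArgumentNullException(nameof(getVolume));
        this.setVolume = setVolume ?? throw new ArgumentNullException(nameof(setVolume));
    }

    public bool IsDucked => savedVolume.HasValue;

    // Lowers the volume to duckedVolume (clamped to 0.0-1.0). A second call while already
    // lowered keeps the volume saved by the first call, so Restore returns to the original level.
    public void Duck(double duckedVolume)
    {
        if (!savedVolume.HasValue)
            savedVolume = getVolume();
        setVolume(Math.Clamp(duckedVolume, 0.0, 1.0));
    }

    // Restores the saved volume, if any.
    public void Restore()
    {
        if (savedVolume.HasValue)
        {
            setVolume(savedVolume.Value);
            savedVolume = null;
        }
    }
}
```

With the UWP player it could be created as new VolumeDucker(() => media_player.Volume, v => media_player.Volume = v), calling Duck before RecognizeAsync and Restore in a finally block, so that the music comes back even if recognition throws.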

```csharp
private async Task StandardTasksList(string command)
{
    if (command == "what time is it")
    {
        var now = System.DateTime.Now;
        await GUIOutput("It's " + now.Hour.ToString("D2") + ":" + now.Minute.ToString("D2"), true);
        state = AssistantState.BACKGROUND_DEFAULT;
    }
    else if (command == "what day is today")
    {
        // "D" formats the date with the current culture's long date pattern
        await GUIOutput(System.DateTime.Today.ToString("D"), true);
        state = AssistantState.BACKGROUND_DEFAULT;
    }
    else if (command == "tell me a joke")
        await TellJoke();
    else if (command == "play some music")
        await PlayMusic();
    else if (command == "save a reminder")
        await SaveReminder();
    else if (command == "any plans for today")
        await SearchReminder();
    else if (command == "tell me something interesting")
        await RandomWikiArticle();
    else if (command == "what's the weather like today" || command == "how's the weather today")
        await GetWeatherInfos();
    else if (command == "inspire me")
        await GetInspiringQuote();
    else if (command == "open the stopwatch")
        await OpenStopwatch();
    else if (command == "search a recipe")
        await SearchRecipe();
    else
    {
        // Unknown command: apologize and go back to the idle state
        await GUIOutput("I'm sorry, I couldn't understand.", true);
        state = AssistantState.BACKGROUND_DEFAULT;
    }
}
```

Here we can see the commands that can be given to the assistant when its current state is LISTENING_DEFAULT. Every feature is handled by its own function, except for the time and date requests, which are answered inline. Notice that only these two branches and the final fallback set the state back to BACKGROUND_DEFAULT; the other branches do not change the state in this function.
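StandardTasksList compares the recognized text with each phrase using ==, so the match is exact: case, punctuation and extra spaces all matter. If the recognizer returns free-form text (for instance because no grammar constraints are added before CompileConstraintsAsync; none appear in AssistantSetup, although they could be added elsewhere in the project), a result such as "What time is it?" would fall into the "I'm sorry" branch. The sketch below is one possible alternative, not the author's design: a hypothetical CommandTable maps normalized phrases to async handlers and calls a fallback handler for unknown input. The normalization rules (lower-casing, turning a typographic apostrophe into a plain one, dropping other punctuation, collapsing spaces) are assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical alternative to the if/else chain: normalized phrases map to async handlers.
public sealed class CommandTable
{
    private readonly Dictionary<string, Func<Task>> handlers = new Dictionary<string, Func<Task>>();
    private readonly Func<Task> fallback;

    public CommandTable(Func<Task> fallback) =>
        this.fallback = fallback ?? throw new ArgumentNullException(nameof(fallback));

    // Several phrases can share one handler (e.g. the two weather questions).
    public CommandTable Add(Func<Task> handler, params string[] phrases)
    {
        foreach (var phrase in phrases)
            handlers[Normalize(phrase)] = handler;
        return this;
    }

    // Lower-cases, maps U+2019 to a plain apostrophe, drops other punctuation and collapses whitespace.
    public static string Normalize(string text)
    {
        if (string.IsNullOrWhiteSpace(text)) return string.Empty;
        var kept = new string(text.ToLowerInvariant()
            .Select(c => c == '\u2019' ? '\'' : c)
            .Where(c => char.IsLetterOrDigit(c) || char.IsWhiteSpace(c) || c == '\'')
            .ToArray());
        return string.Join(" ", kept.Split((char[])null, StringSplitOptions.RemoveEmptyEntries));
    }

    // Runs the matching handler, or the fallback for unknown input; returns true on a match.
    public async Task<bool> DispatchAsync(string recognizedText)
    {
        if (handlers.TryGetValue(Normalize(recognizedText), out var handler))
        {
            await handler();
            return true;
        }
        await fallback();
        return false;
    }
}
```

In StandardTasksList, the handlers could be the existing methods, e.g. table.Add(TellJoke, "tell me a joke"), while the fallback would print the "I'm sorry" message and reset the state to BACKGROUND_DEFAULT, as the original else branch does.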