The aim of the project is to build a virtual piano by programming a DSP.

The figure shows the general outline of the project: the user moves the source S along the "virtual" piano; S emits a sound that is received by microphones 1 and 2; the DSP then compares signals 1 and 2, determines the position of S on the keyboard and produces a note of the corresponding frequency.


Therefore, the steps to follow for the construction are:

- Study the physics of the problem in light of the available tools;

- Learn how to program the DSP device;

- Design the filters to clean up the signals;

- Write the C code that receives the signals and generates the DSP's response in real time;

- Simulate and test to find the best configuration.

Given the available tools, the physical principle of the project is the delay between the sounds received by microphone 1 and microphone 2. Unfortunately, this delay can be affected by several factors: frequency, noise, sampling rate, and the distance between the microphones and the source. To simplify the work we use a 2 kHz sine wave as the source.

Of course, this sound lies within the range of frequencies audible to the human ear (20 Hz to 20 kHz).

However, as explained later, the source does not need to be loud, so it is not confused with the notes produced by the DSP and it does not disturb the listener.

The distances between the components, in particular between the two microphones and the source, are limited by the delay detection: the microphones can be neither too close to nor too far from the source, nor too far from each other. In particular, the distance between the source and the microphones cannot exceed one wavelength, so that the phase detected by the device stays within a range corresponding to one wavelength, in our case about 17 to 18 cm (speed of sound divided by frequency). Within these limits, the microphones are 16 cm apart and the source is 10 cm away from them in the orthogonal direction.

The microphones pick up the sound and send the signal to the DSP: the basic principle of the project is to calculate the delay between the two signals and associate a note with each small range of delay values. In our case each key is 2 cm wide, from A (440 Hz) up to the following G, and the microphones are 1 cm beyond the edges of the keyboard. If the source is placed exactly halfway between the two microphones, the delay is almost zero and the note is D.

The signal processing is done by the Texas Instruments TMS320C6713 DSP. Numerous tutorials for the device are available on the Internet and in the downloads section.

The project uses the board's audio input and output: the input receives the signals from the two microphones, while the output plays the sound corresponding to the position of the source. The board has a single stereo audio input, which raises a first problem, because each microphone produces a mono signal. To solve it we built a simple adapter that takes the two microphone signals and combines them into one left/right stereo signal.
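Once the two microphones are merged into one stereo signal, the DSP receives each left/right pair together. On the DSK the codec sample is commonly read as one 32-bit word holding two 16-bit channels; the sketch below shows how such a word can be split into the two microphone signals. Which half carries the left channel depends on the codec configuration, so the "left in the upper 16 bits" layout and the names `split_stereo` and `deinterleave` are assumptions.

```c
#include <stdint.h>

/* Hypothetical layout: left channel (microphone 1) in the upper 16 bits,
   right channel (microphone 2) in the lower 16 bits of one codec word. */
void split_stereo(uint32_t word, short *left, short *right)
{
    *left  = (short)(int16_t)(uint16_t)(word >> 16);
    *right = (short)(int16_t)(uint16_t)(word & 0xFFFFu);
}

/* Fill the two channel buffers from n consecutive codec words. */
void deinterleave(const uint32_t *words, int n, short *left, short *right)
{
    for (int i = 0; i < n; i++)
        split_stereo(words[i], &left[i], &right[i]);
}
```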

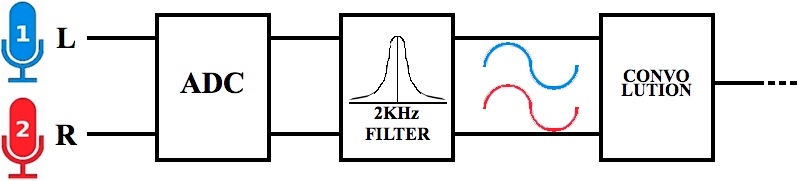

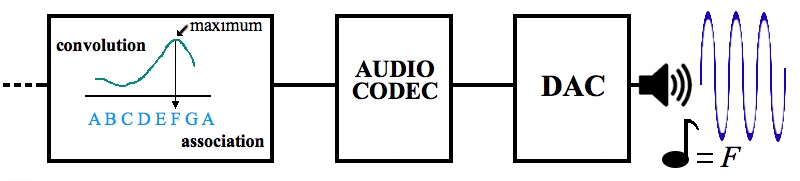

The diagram shows how the two signals are handled inside the DSP. First, they are converted to digital samples and filtered to obtain cleaner signals. The next step is the convolution (strictly speaking a cross-correlation, since neither signal is time-reversed): the position of its maximum corresponds to the phase difference between the left and right signals, and this is the mathematical core of the project. Each small range of delay values is then associated with the corresponding note, and the DSP responds in real time by emitting that note. The last two steps are the processing in the audio codec and the digital-to-analog conversion.

This section shows some examples of real-time measurements made by the DSP; the videos show the latest version of the virtual piano. The following plots show the detection at different stages of processing:

- Detection of the signals (left and right) without filter

- Bode diagram of the 2 kHz filter

- Detection of the signals (left and right) with the 2 kHz filter

- Wavelength samples of the signals used for the convolution

- Convolution: its maximum is associated with the desired note

These videos show the latest version of the Virtual Piano.

Development problems:

The first problem during development was a wrong choice of source frequency: initially we used 100 Hz, because we thought this frequency would be too low to be heard.

However, at 100 Hz the system was too sensitive to interference (especially from the 50 Hz mains frequency, whose second harmonic is exactly 100 Hz), and the filters were not able to suppress it.

So we moved to 150 Hz, then to 200 Hz and even 1 kHz, but none of these versions worked as well as the one with a 2 kHz source.

The final choice of source frequency limits the virtual piano to a 16 x 10 cm keyboard (close to the real size of 7 keys on a piano), whereas the first versions required distances of several meters, which was a logistical problem.
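The link between source frequency and keyboard size follows from the wavelength formula λ = c/f. With an assumed speed of sound of 343 m/s, the sketch lists the wavelength for each source frequency tried during development, showing why 100 Hz led to distances of meters while 2 kHz fits a keyboard of about 16 cm. The function names are illustrative.

```c
#include <stddef.h>

#define C_SOUND 343.0 /* m/s, assumed (air at about 20 degrees C) */

/* Source frequencies tried during development, in Hz. */
static const double tried_hz[] = { 100.0, 150.0, 200.0, 1000.0, 2000.0 };

/* Wavelength in centimetres of a tone of frequency f_hz. */
double wavelength_cm(double f_hz)
{
    return 100.0 * C_SOUND / f_hz;
}

/* Write the wavelength of each tried frequency into out[]; returns how many were written. */
size_t tried_wavelengths_cm(double *out, size_t max)
{
    size_t n = sizeof tried_hz / sizeof tried_hz[0];
    if (n > max)
        n = max;
    for (size_t i = 0; i < n; i++)
        out[i] = wavelength_cm(tried_hz[i]);
    return n;
}
```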

Last but not least, the working environment: the temperature of the University laboratory affected the speed of sound, and the electronic equipment in the laboratory may also have influenced the results.
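To get a feeling for how much the laboratory temperature matters, the sketch uses the common linear approximation for the speed of sound in air, c ≈ 331.3 + 0.606·T m/s with T in °C, and converts the 16 cm microphone spacing into the largest possible delay in samples at an assumed 48 kHz sampling rate. The function names are illustrative.

```c
#define FS_HZ   48000.0 /* assumed sampling rate */
#define MIC_GAP 0.16    /* m, distance between the microphones */

/* Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius. */
double speed_of_sound(double temp_c)
{
    return 331.3 + 0.606 * temp_c;
}

/* Largest possible delay between the microphones, in samples; it is reached
   only when the source lies on the microphone axis, outside the segment
   between the two microphones. */
double max_delay_samples(double temp_c)
{
    return MIC_GAP / speed_of_sound(temp_c) * FS_HZ;
}
```

With these assumptions the maximum delay is about 22.4 samples at 20 °C and changes by roughly 0.4 samples for every 10 °C, a noticeable fraction of the few samples that separate adjacent keys.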

But, in general, it works!

The basic tools needed to build the project are:

- Texas Instruments C6713 DSP Development Board:

The Texas Instruments TMS320C6713 DSP runs at up to 225 MHz and delivers 1800 MIPS and 1350 MFLOPS. The board has 512 KB of Flash memory, 16 MB of SDRAM, four 3.5 mm audio jacks (microphone, line in, speaker and line out) and a 24-bit stereo codec. It communicates with the PC through a USB port with a JTAG interface.

- Texas Instruments C6713 DSK Code Composer Studio: Code Composer Studio (version 3.1) is the compiling, linking and debugging software. CCS also provides a diagnostic tool to check that the board works correctly, and tools to plot the signals inside the device in the time or frequency domain.

- Audacity: software used to create the 2 kHz sine wave. This is a mono signal, so the source can be a simple headphone.

- Microphones: two omnidirectional microphones capture the 2 kHz sine wave. The microphones are the main source of noise problems.

- Speaker: plays the desired note; it is connected to the DSP output.

- Electrical wiring: simple audio wiring is used for signal transmission. The two mono signals picked up by the microphones must be combined into a single audio cable for processing by the DSP.

- Technical document of the device

- Second technical document of the device

- Tutorial on Code Composer Studio

The most important function of the entire project is the one that computes the delay:

```c
/***********************************************************************

Virtual_Piano.c

***********************************************************************/

#include "dsk6713.h"
#include "dsk6713_led.h"   // DSK6713_LED_on(), DSK6713_LED_off()

// Declared elsewhere in Virtual_Piano.c (not shown): the globals tone,
// freq, new_tone, old_tone, tn, coef, the constant PI and shift_right().

void detect_delay(short *vector_1, short *vector_2)
{
    int convolution[40];
    int x[130];
    int y[130];
    int k = 0, t = 0, m = 0, max = 0, index_max = 0;

    DSK6713_LED_on(0);

    // init convolution:
    for (k = 0; k < 40; k++)
        convolution[k] = 0;

    // x: 20 zeros, the first 90 samples of vector 1, then 20 zeros
    for (k = 0; k < 20; k++)
        x[k] = 0;
    for (k = 20; k < 110; k++)
        x[k] = vector_1[k - 20];
    for (k = 110; k < 130; k++)
        x[k] = 0;

    // y: the first 90 samples of vector 2 followed by 40 zeros;
    // it is moved one sample to the right at each step below
    for (k = 0; k < 90; k++)
        y[k] = vector_2[k];
    for (k = 90; k < 130; k++)
        y[k] = 0;

    for (k = 0; k < 40; k++) // 40 y vector movements
    {
        for (t = 0; t < 130; t++)
            convolution[k] = convolution[k] + x[t] * y[t];
        shift_right(y); // right shift of the vector by one sample
    }

    // index of the convolution maximum
    for (m = 0; m < 40; m++)
    {
        if (convolution[m] >= max)
        {
            max = convolution[m];
            index_max = m;
        }
    }

    // map the index of the maximum to a note
    tone = index_max;
    if (tone > 0 && tone < 7)
        freq = 440; // A
    else if (tone >= 7 && tone < 11)
        freq = 494; // B
    else if (tone >= 11 && tone < 17)
        freq = 523; // C
    else if (tone >= 17 && tone < 21)
        freq = 587; // D
    else if (tone >= 21 && tone < 28)
        freq = 659; // E
    else if (tone >= 28 && tone < 33)
        freq = 698; // F
    else if (tone >= 33 && tone < 38)
        freq = 784; // G
    else
        freq = 400; // if freq = 400, audio will be turned off

    new_tone = freq;

    // control: switch to a new tone only after three consecutive
    // detections that differ from the current one
    if (new_tone != old_tone)
    {
        tn++;
        if (tn >= 3)
        {
            tn = 0;
            old_tone = new_tone;
        }
    }
    else
        tn = 0;

    freq = old_tone;
    coef = PI * freq / 48000;

    DSK6713_LED_off(0);
}
```

- 67693 Marco Cargnelutti

- 71530 Elvis Kapllaj

- 76204 Marco Gava

Course held by: Prof. Riccardo Bernardini