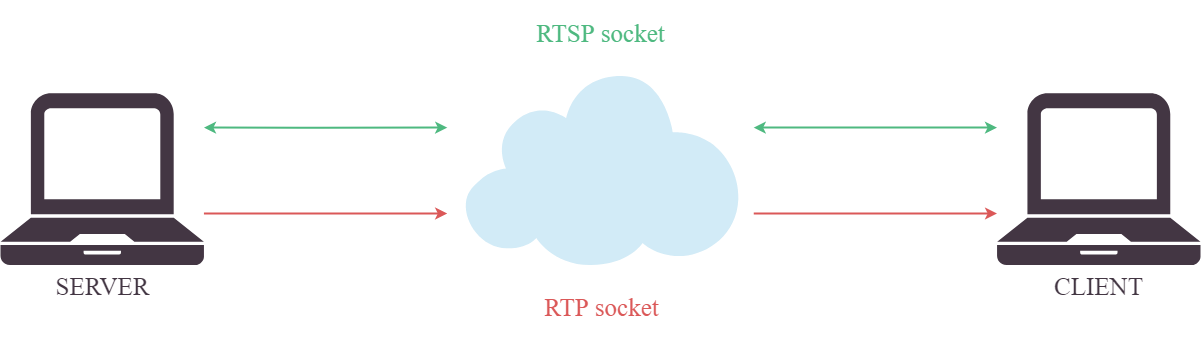

The overall system

The project relies on a base structure to handle communication. This structure, the overall system, implements a server-client model: the server records and encodes the audio and provides the client with the encoded video, while the client decodes the video and audio and plays them back. To achieve real-time streaming, the system creates two sockets. The first uses a modified version of the Real Time Streaming Protocol (RTSP) [1] for streaming control and key exchange; the second uses the Real-time Transport Protocol (RTP) [2] to deliver video frames. Because the RTP channel needs low latency, it runs over the User Datagram Protocol (UDP) [3], which is transaction-oriented and unreliable. The RTSP channel instead uses the Transmission Control Protocol (TCP) [4], so that control messages and keys are not lost to network problems.
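The two-channel layout can be sketched with Python's standard `socket` module. This is only an illustration under stated assumptions: the project itself appears to use Qt sockets (it calls `QByteArray` and `receiveDatagram`), and the helper names `open_control_channel` and `open_media_channel`, the loopback host, and the OS-assigned ports are all hypothetical.

```python
import socket

HOST = "127.0.0.1"  # hypothetical: loopback address, for illustration only


def open_control_channel() -> socket.socket:
    """Listening TCP socket for the RTSP-style control and key-exchange channel.

    TCP retransmits lost segments and preserves ordering, so control
    requests and key material arrive intact.
    """
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((HOST, 0))  # port 0: let the OS choose a free port
    server.listen(1)
    return server


def open_media_channel() -> socket.socket:
    """UDP socket for the RTP media channel.

    No handshake and no retransmission: in live streaming a late frame is
    worth less than a lost one, so UDP's lower latency is preferred.
    """
    media = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    media.bind((HOST, 0))
    return media
```

The client connects to the control socket first; the UDP socket only carries media once the control exchange has completed.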

Once the main server and client are launched, the client connects to the server and establishes the RTSP connection. The two parties then agree on the secret key needed for video encoding using the Diffie-Hellman key exchange [5], after which the second connection is set up.

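```python
import secrets
from typing import Optional

# In the key-exchange object's constructor: PUBLIC_PRIME, PUBLIC_GENERATOR
# and GROUP_SIZE are the public Diffie-Hellman parameters shared by both parties.
random_generator = secrets.SystemRandom()  # CSPRNG backed by the OS
self.private_key = random_generator.randrange(1, self.GROUP_SIZE)
# Partial (public) key g^a mod p, sent to the peer over the RTSP channel
self.partial_key = pow(self.PUBLIC_GENERATOR, self.private_key, self.PUBLIC_PRIME)
self.received_key: Optional[int] = None
self.secret: Optional[int] = None

def generate_secret(self, received_key: bytes) -> int:
    # Decode the peer's partial key, then raise it to our private exponent
    self.set_received_key(self.bytes_to_int(received_key))
    self.secret = pow(self.received_key, self.private_key, self.PUBLIC_PRIME)
    return self.secret
```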

Initialization and key computation with Diffie-Hellman
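A complete two-party exchange can be sketched as follows. The class `DiffieHellmanParty`, the toy parameters (the Mersenne prime 2**127 - 1 with base 3), and the 16-byte big-endian wire format are assumptions for illustration; they are not the project's actual values, and `int.from_bytes` stands in for the project's `bytes_to_int`. The agreement works for any prime and base, because (g^b)^a = (g^a)^b mod p, but a real deployment should use a standardized group such as one from RFC 3526.

```python
import secrets

# Hypothetical toy parameters, for illustration only (NOT secure choices).
PUBLIC_PRIME = 2**127 - 1
PUBLIC_GENERATOR = 3
KEY_BYTES = (PUBLIC_PRIME.bit_length() + 7) // 8  # 16 bytes on the wire


class DiffieHellmanParty:
    """One side of the exchange (hypothetical stand-in for the project's class)."""

    def __init__(self) -> None:
        rng = secrets.SystemRandom()
        # Private exponent a, drawn from [1, p - 2]
        self.private_key = rng.randrange(1, PUBLIC_PRIME - 1)
        # Partial key A = g^a mod p, safe to send in the clear
        self.partial_key = pow(PUBLIC_GENERATOR, self.private_key, PUBLIC_PRIME)

    def partial_key_bytes(self) -> bytes:
        return self.partial_key.to_bytes(KEY_BYTES, "big")

    def generate_secret(self, received_key: bytes) -> int:
        peer_key = int.from_bytes(received_key, "big")
        # Reject degenerate values (0, 1, p - 1, ...) that make the secret predictable
        if not 2 <= peer_key <= PUBLIC_PRIME - 2:
            raise ValueError("invalid partial key")
        # s = B^a mod p = g^(ab) mod p, identical on both sides
        return pow(peer_key, self.private_key, PUBLIC_PRIME)
```

Each side sends `partial_key_bytes()` over the TCP channel and calls `generate_secret` on what it receives; only the partial keys travel over the network, never the private exponents.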

Audio recording and encoding are paced to match the video frame rate (fps), so the server sends a packet as soon as a new chunk of audio data is available. Because network conditions affect delivery, the client waits for an initial delay before starting playback, which keeps the audio continuous.
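Matching audio to the frame rate means reading sample_rate / fps audio samples per video frame: for example 44100 / 30 = 1470 samples at 44.1 kHz and 30 fps. These rates are illustrative assumptions; the project's actual values are not stated. A minimal sketch of a hypothetical helper, `audio_chunk_sizes`, that also handles ratios that are not whole numbers:

```python
from typing import List


def audio_chunk_sizes(sample_rate: int, fps: int, n_frames: int) -> List[int]:
    """Audio samples to read for each of the next n_frames video frames.

    Each frame receives the samples owed up to its end time, so the integer
    chunk sizes never drift from the exact rate, even when sample_rate / fps
    is fractional.
    """
    sizes: List[int] = []
    emitted = 0
    for k in range(1, n_frames + 1):
        owed = (k * sample_rate) // fps  # total samples due by the end of frame k
        sizes.append(owed - emitted)
        emitted = owed
    return sizes
```

At 44.1 kHz and 24 fps the exact ratio is 1837.5, so the chunks alternate between 1837 and 1838 samples; rounding every chunk down instead would let the audio fall behind the video by half a sample per frame.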

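```python
# Read the next video frame and its matching chunk of audio
frame = self._video_stream.next_frame()
audio_frame = self._read_next_audio_frame()
# Encode the video frame together with its audio chunk
encoded_frame = self._encoder.encode(frame, audio_frame, seed)
rtp_packet = RTPPacket(payload_type=RTPPacket.TYPE.MJPEG, sequence_number=frame_number,
                       timestamp=frame_number * self._server.FRAME_PERIOD,
                       payload=bytes(encoded_frame)).get_packet()
# Send the serialized packet over the UDP (RTP) socket
self._rtp_socket.write(QByteArray(rtp_packet))
```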

Server-side packet creation and transmission
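What `RTPPacket(...).get_packet()` and `RTPPacket.process_packet` do is not shown, so the following is a sketch of the standard 12-byte RTP fixed header from RFC 3550 (version, padding, extension, CSRC count, marker, payload type, 16-bit sequence number, 32-bit timestamp, 32-bit SSRC). The names `build_rtp_packet`, `parse_rtp_packet` and `RtpPacket` are hypothetical, not the project's API. Payload type 26 is the static JPEG type from RFC 3551; a fully compliant JPEG stream would also carry the RFC 2435 payload header, which is omitted here.

```python
import struct
from typing import NamedTuple

RTP_VERSION = 2
PT_JPEG = 26  # static payload type for JPEG video (RFC 3551)
HEADER = struct.Struct("!BBHII")  # network byte order, 12-byte fixed header


class RtpPacket(NamedTuple):
    payload_type: int
    sequence_number: int
    timestamp: int
    ssrc: int
    marker: bool
    payload: bytes


def build_rtp_packet(payload: bytes, payload_type: int, sequence_number: int,
                     timestamp: int, ssrc: int = 0, marker: bool = False) -> bytes:
    first = RTP_VERSION << 6  # V=2, P=0, X=0, CC=0
    second = (int(marker) << 7) | (payload_type & 0x7F)
    # Sequence number and timestamp wrap around at 16 and 32 bits
    header = HEADER.pack(first, second, sequence_number & 0xFFFF,
                         timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)
    return header + payload


def parse_rtp_packet(packet: bytes) -> RtpPacket:
    if len(packet) < HEADER.size:
        raise ValueError("packet shorter than the RTP fixed header")
    first, second, seq, ts, ssrc = HEADER.unpack_from(packet)
    if first >> 6 != RTP_VERSION:
        raise ValueError("not an RTP version 2 packet")
    # Skip any CSRC identifiers (4 bytes each); extensions and padding are
    # not handled in this sketch
    offset = HEADER.size + 4 * (first & 0x0F)
    return RtpPacket(second & 0x7F, seq, ts, ssrc, bool(second >> 7), packet[offset:])
```

The original code derives the timestamp from `frame_number * FRAME_PERIOD`; with the 90 kHz clock that RFC 3551 assigns to JPEG video, a 30 fps stream would advance the timestamp by 3000 per frame.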

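```python
import numpy as np

# Read one UDP datagram and parse its RTP header
datagram = self._rtp_socket.receiveDatagram().data().data()
packet = RTPPacket.process_packet(bytes(datagram))
# View the payload as a flat byte array, then decode video and audio
frame = np.frombuffer(packet.get_payload(), dtype=np.int8)
decoded_frame, decoded_audio_frame = self._decoder.decode(frame, seed)
```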

Client-side packet reception and decoding
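The initial delay the client applies before playback can be realized in several ways; the project's `wait_audio_delay` may simply wait a fixed time. As one alternative, here is a minimal sketch of a count-based playout buffer, `PlayoutBuffer` (a hypothetical name), that reorders frames by RTP sequence number and withholds output until a given number of frames has accumulated. For brevity it ignores 16-bit sequence-number wraparound.

```python
import heapq
from typing import List, Optional, Tuple


class PlayoutBuffer:
    """Reorders frames by sequence number and delays the start of playback
    until `initial_delay` frames are buffered (prebuffering)."""

    def __init__(self, initial_delay: int) -> None:
        self.initial_delay = initial_delay
        self._heap: List[Tuple[int, bytes]] = []  # min-heap keyed by sequence number
        self._started = False
        self._next_seq: Optional[int] = None

    def push(self, seq: int, frame: bytes) -> None:
        # Drop frames that arrive after playback has already moved past them
        if self._next_seq is not None and seq < self._next_seq:
            return
        heapq.heappush(self._heap, (seq, frame))

    def pop(self) -> Optional[bytes]:
        """Next frame to play, or None while prebuffering or on underrun."""
        if not self._started:
            if len(self._heap) < self.initial_delay:
                return None
            self._started = True
        if not self._heap:
            return None
        seq, frame = heapq.heappop(self._heap)
        self._next_seq = seq + 1
        return frame
```

The trade-off is the usual one: a larger `initial_delay` absorbs more network jitter at the cost of more end-to-end latency.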

The system relies heavily on multithreading, which is needed not only to manage two connections simultaneously but also to run its various functions and graphical interfaces at the same time. Thread management and inter-thread signaling were by far the most complex parts of the overall system to implement.

```python
# One thread per worker object: RTSP control, RTP reception, audio playback
self.rtsp_connection_thread = QThread()
self.rtp_connection_thread = QThread()
self.audio_player_thread = QThread()

# Signal/slot connections between the workers and the GUI
self.rtsp_connection.play_request.connect(self.rtp_connection.start)
self.rtp_connection.video_frame_ready.connect(self.update_image)
self.rtp_connection.first_audio_frame_arrived.connect(self.audio_player.wait_audio_delay)

# Move each worker to its own thread so that its slots run there
self.rtsp_connection.moveToThread(self.rtsp_connection_thread)
self.rtp_connection.moveToThread(self.rtp_connection_thread)
self.audio_player.moveToThread(self.audio_player_thread)

# Start each thread's event loop
self.rtsp_connection_thread.start()
self.rtp_connection_thread.start()
self.audio_player_thread.start()
```

Example of thread creation and signal connection on the client side
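The pattern above (a worker object moved to a `QThread`, driven by signals) works because, with Qt's default automatic connection type, a signal whose receiver lives in another thread is delivered as a queued event and the slot runs in the receiver's thread; the connection type is decided at emission time, so connecting before `moveToThread` is fine. To show the mechanism without a Qt dependency, here is a rough stand-in built from the standard `threading` and `queue` modules. `Worker` and `Signal` are hypothetical names; this is an analogue of queued connections, not Qt's implementation.

```python
import queue
import threading
from typing import Any, Callable, List, Optional, Tuple

Slot = Callable[..., None]


class Worker:
    """Owns a thread and an event queue, loosely mimicking a QObject moved to
    a QThread: slots posted to it always run on its own thread."""

    def __init__(self, name: str) -> None:
        self._events: "queue.Queue[Optional[Tuple[Slot, tuple]]]" = queue.Queue()
        self._thread = threading.Thread(target=self._run, name=name, daemon=True)

    def start(self) -> None:
        self._thread.start()

    def post(self, slot: Slot, *args: Any) -> None:
        self._events.put((slot, args))

    def stop(self) -> None:
        self._events.put(None)  # sentinel: ends the loop after pending events
        self._thread.join()

    def _run(self) -> None:
        # Event loop: execute posted slots one at a time, in order
        while True:
            item = self._events.get()
            if item is None:
                break
            slot, args = item
            slot(*args)


class Signal:
    """Queued-connection signal: emit() posts each connected slot to its
    receiver's event queue instead of calling it directly."""

    def __init__(self) -> None:
        self._connections: List[Tuple[Worker, Slot]] = []

    def connect(self, receiver: Worker, slot: Slot) -> None:
        self._connections.append((receiver, slot))

    def emit(self, *args: Any) -> None:
        for receiver, slot in self._connections:
            receiver.post(slot, *args)
```

In this analogue, emitting `first_audio_frame_arrived` from the RTP worker runs the audio player's slot on the audio thread, never on the emitter's thread, which mirrors the behavior the client code relies on.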

Currently, communication flows in one direction only (from server to client), but the system could be extended to support bidirectional communication, as in an actual phone conversation.